Abstract

This project develops privacy mechanisms for clinical video observation sessions, focusing on mechanisms that protect the facial identity of the child under observation while retaining the gaze- and expression-based information that is critical for diagnostic assessments.

We acknowledge funding from: NIH R21 “Protecting the privacy of the child through facial identity removal in recorded behavioral observation sessions” (2020-2022).

Outreach and Dissemination

SIGGRAPH Spotlight: Episode 39 – Deepfakes

For the latest episode of SIGGRAPH Spotlight, SIGGRAPH 2021 Technical Papers Chair Sylvain Paris (fellow, Adobe Research) is joined by a group of the computer graphics industry’s best and brightest to tackle the subject of deepfakes — from the history of deepfake tech through to how it’s being used today. Press play to hear insight in two parts from Chris Bregler (sr. staff scientist, Google AI), Eakta Jain (assistant professor, University of Florida), and Matthias Nießner (professor, TU München) [Part 1 – 0:00:34], and from ctrl shift face (independent artist) [Part 2 – 0:52:55]. https://blog.siggraph.org/2020/12/siggraph-spotlight-episode-39-deepfakes.html/

Publications

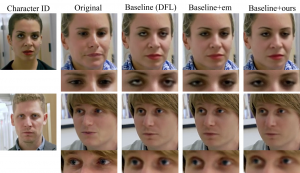

Towards mitigating uncann(eye)ness in face swaps via gaze-centric loss terms

“Towards mitigating uncann(eye)ness in face swaps via gaze-centric loss terms”, Ethan Wilson, Frederick Shic, Sophie Jörg, Eakta Jain. Computers & Graphics, Special Issue: Eye Gaze Visualization, Interaction, Synthesis, and Analysis. In press.

- Paper (preprint)

- Bibtex:

@article{wilson_uncanneyeness_2024,

author = {Ethan Wilson and Frederick Shic and Sophie Jörg and Eakta Jain},

title = {Towards mitigating uncann(eye)ness in face swaps via gaze-centric loss terms},

year = {2024},

journal = {Computers \& Graphics},

doi = {10.1016/j.cag.2024.103888},

}

Introducing Explicit Gaze Constraints to Face Swapping

“Introducing Explicit Gaze Constraints to Face Swapping”, Ethan Wilson, Frederick Shic, Eakta Jain, ACM Symposium on Eye Tracking Research & Applications (ETRA '23).

- Paper (preprint)

- Bibtex:

@inproceedings{wilson_explicitgaze_2023,

author = {Wilson, Ethan and Shic, Frederick and Jain, Eakta},

title = {Introducing Explicit Gaze Constraints to Face Swapping},

year = {2023},

publisher = {Association for Computing Machinery},

booktitle = {2023 Symposium on Eye Tracking Research and Applications},

series = {ETRA '23}

}

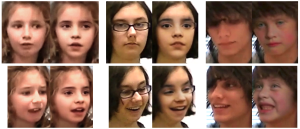

Practical Digital Disguises

“Practical Digital Disguises: Leveraging Face Swaps to Protect Patient Privacy”, Ethan Wilson, Frederick Shic, Jenny Skytta, Eakta Jain, arXiv preprint arXiv:2204.03559, 2022.

@misc{wilson_digitaldisguises_2022,

author = {Wilson, Ethan and Shic, Frederick and Skytta, Jenny and Jain, Eakta},

title = {Practical Digital Disguises: Leveraging Face Swaps to Protect Patient Privacy},

publisher = {arXiv},

year = {2022},

eprint = {2204.03559},

archivePrefix = {arXiv}

}

The Uncanniness of Face Swaps

“The Uncanniness of Face Swaps”, Ethan Wilson, Aidan Persaud, Nicholas Esposito, Sophie Joerg, Rohit Patra, Jenny Skytta, Frederick Shic, Eakta Jain, Journal of Vision, 2022.

Abstract: Face swapping algorithms, popularly known as “deep fakes”, generate synthetic faces whose movements are driven by an actor’s face. To create face swaps, users construct training datasets consisting of the two faces being applied and replaced. Despite the availability of public code bases, creating a compelling, convincing face swap remains an art rather than a science because of the parameter tuning involved and the unclear consequences of parameter choices. In this paper, we investigate the effect of different dataset properties and how they influence the uncanny, eerie feeling viewers experience when watching face swaps. In one experiment, we present participants with video from the FaceForensics++ Deep Fake Detection dataset. We ask them to score the clips on bipolar adjective pairs previously designed to measure the uncanniness of computer-generated characters and faces within three categories: humanness, eeriness, and attractiveness. We find that responses to face swapped clips are significantly more negative than to unmodified clips. In another experiment, participants are presented with video stimuli of face swaps generated using deepfake models that have been trained on deficient data. These deficiencies include low resolution images, lowered numbers of images, deficient/mismatched expressions, and mismatched poses. We find that mismatches in resolution, expression, and pose and deficient expressions all induce a higher negative response compared to using an optimal training dataset. Our experiments indicate that face swapped videos are generally perceived to be more uncanny than original videos, but certain dataset properties can increase the effect, such as image resolution and quality characteristics including expression/pose match-ups between the two faces. These insights on dataset properties could be directly used by researchers and practitioners who work with face swapping to act as a guideline for higher-quality dataset construction. The presented methods additionally open up future directions for perceptual studies of face swapped videos.

@article{wilson2022uncanniness,

title={The Uncanniness of Face Swaps},

author={Wilson, Ethan and Persaud, Aidan and Esposito, Nicholas and Joerg, Sophie and Patra, Rohit and Shic, Frederick and Skytta, Jenny and Jain, Eakta},

journal={Journal of Vision},

volume={22},

number={14},

pages={4225--4225},

year={2022},

publisher={The Association for Research in Vision and Ophthalmology}

}

Annotation System For Aiding Automatic Face Detectors

“Annotation System For Aiding Automatic Face Detectors”, Ethan Wilson, Jenny Skytta, Frederick Shic, Eakta Jain, University of Florida Technical Report, IR00011535, 2021.

- Paper

- Bibtex:

@techreport{wilson_annotation_2021,

title = {Annotation System For Aiding Automatic Face Detectors},

author = {Ethan Wilson and Jenny Skytta and Frederick Shic and Eakta Jain},

year = {2021},

institution = {University of Florida}

}

Benchmarking Face Detectors

“Benchmarking Face Detectors”, Ethan Wilson, Jenny Skytta, Frederick Shic, Eakta Jain, University of Florida Technical Report, IR00011536, 2021.

- Paper

- Bibtex:

@techreport{wilson_benchmarking_2021,

title = {Benchmarking Face Detectors},

author = {Ethan Wilson and Jenny Skytta and Frederick Shic and Eakta Jain},

year = {2021},

institution = {University of Florida}

}