Measuring Behavioral and Physiological Responses in Videos and VR

Abstract

Videos can elicit strong emotional responses from viewers. We are interested in measuring and understanding these responses, both for conventional videos and for viewers in Virtual Reality.

Projects

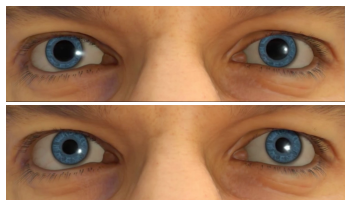

Pupil as a Perceptual Cue

- Paper (preprint) (PDF – 8.2MB)

- Bibtex entry:

@article{dong2022avatar,

author = {Dong, Yuzhu and Joerg, Sophie and Jain, Eakta},

title = {Is the Avatar Scared? Pupil as a Perceptual Cue},

journal = {Computer Animation and Virtual Worlds},

volume = {(in press)},

number = {},

pages = {},

doi = {10.1002/cav.1421},

year = {2022}

}

Comparison of Kent and Viewport methods for our dataset and Salient360!

A Benchmark of Four Methods for Generating 360° Saliency Maps from Eye Tracking Data

“A Benchmark of Four Methods for Generating 360° Saliency Maps from Eye Tracking Data”, Brendan John, Pallavi Raiturkar, Olivier Le Meur, Eakta Jain, IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), 2018. Selected as one of the top 4 of 58 accepted papers and invited to submit an extended version to the International Journal of Semantic Computing.

- Paper (preprint) (PDF – 1.8MB)

- Code (ZIP)

- Bibtex entry:

@inproceedings{john2018,

author = {John, Brendan and Raiturkar, Pallavi and Le Meur, Olivier and Jain, Eakta},

title = {A Benchmark of Four Methods for Generating 360° Saliency Maps from Eye Tracking Data},

booktitle = {IEEE International Conference on Artificial Intelligence and Virtual Reality},

year = {2018},

organization = {IEEE}

}

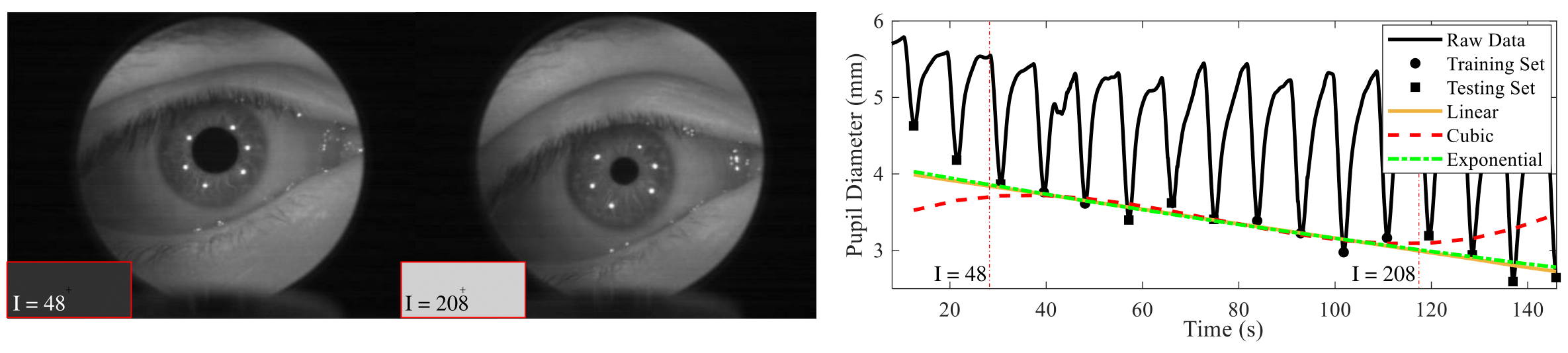

Pupillary light response in VR for two different grayscale intensities.

An Evaluation of Pupillary Light Response Models for 2D Screens and VR HMDs

“An Evaluation of Pupillary Light Response Models for 2D Screens and VR HMDs”, Brendan John, Pallavi Raiturkar, Arunava Banerjee, Eakta Jain, ACM Symposium on Virtual Reality Software and Technology (VRST), 2018.

- Paper (free download through ACM Author-Izer)

An evaluation of pupillary light response models for 2D screens and VR HMDs

Brendan John, Pallavi Raiturkar, Arunava Banerjee, Eakta Jain

VRST ’18 Proceedings of the 24th ACM Symposium on Virtual Reality Software and Technology, 2018

- Paper (preprint) (PDF – 3MB)

- Code (ZIP)

- Data (ZIP)

- Stimuli (ZIP)

- Teaser video (MP4 – 21.7MB)

- Bibtex entry:

@inproceedings{johnandraiturkar2018,

author = {John, Brendan and Raiturkar, Pallavi and Banerjee, Arunava and Jain, Eakta},

title = {An Evaluation of Pupillary Light Response Models for 2D Screens and VR HMDs},

booktitle = {Proceedings of the ACM Symposium on Virtual Reality Software and Technology},

year = {2018},

doi = {10.1145/3281505.3281538},

publisher = {ACM}

}

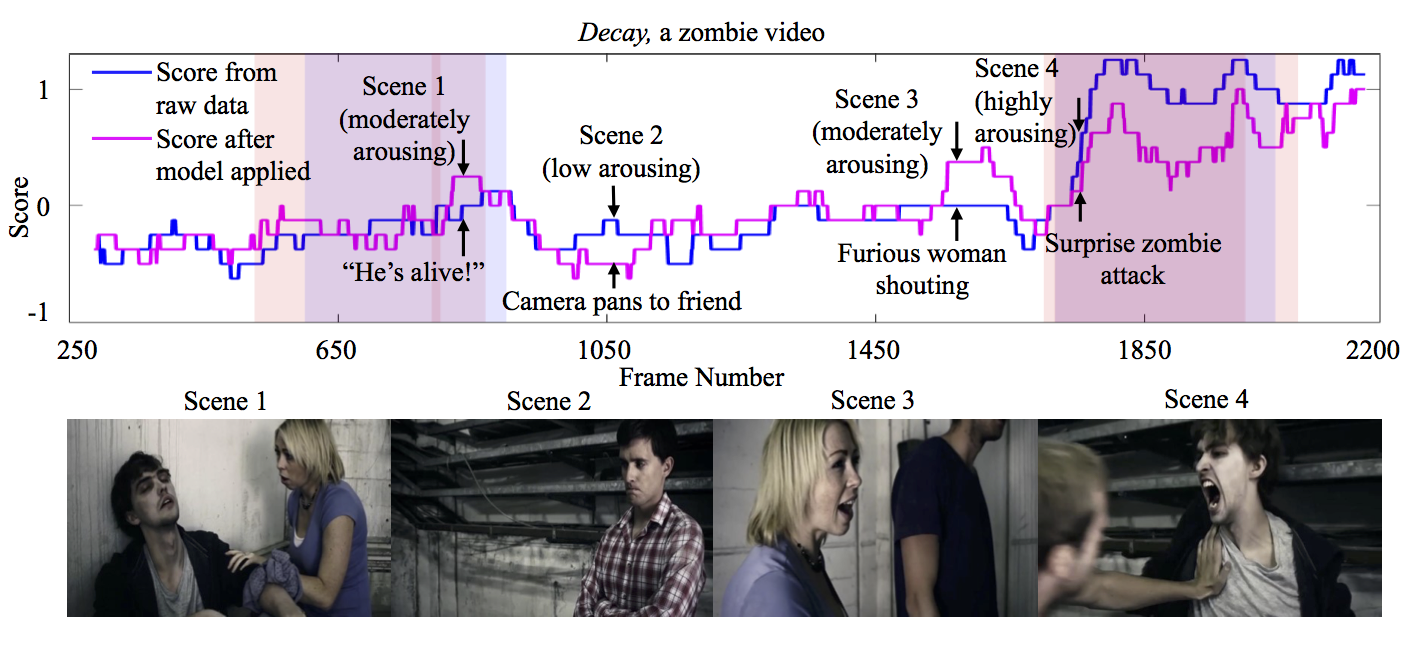

Example video with emotional response plotted.

Decoupling Light Reflex from Pupillary Dilation to Measure Emotional Arousal in Videos

“Decoupling Light Reflex from Pupillary Dilation to Measure Emotional Arousal in Videos”, Pallavi Raiturkar, Andrea Kleinsmith, Andreas Keil, Arunava Banerjee, Eakta Jain, ACM Symposium on Applied Perception (SAP), 2016.

- Paper (free download through ACM Author-Izer)

Decoupling light reflex from pupillary dilation to measure emotional arousal in videos

Pallavi Raiturkar, Andrea Kleinsmith, Andreas Keil, Arunava Banerjee, Eakta Jain

SAP ’16 Proceedings of the ACM Symposium on Applied Perception, 2016

- Paper (preprint) (PDF – 1.8MB)

- Code (ZIP)

- Submission video (MP4 – 22.4MB)

- Bibtex entry:

@inproceedings{raiturkar2016,

author = {Raiturkar, Pallavi and Kleinsmith, Andrea and Keil, Andreas and Banerjee, Arunava and Jain, Eakta},

title = {Decoupling Light Reflex from Pupillary Dilation to Measure Emotional Arousal in Videos},

booktitle = {Proceedings of the ACM Symposium on Applied Perception},

year = {2016},

pages = {89--96},

doi = {10.1145/2931002.2931009}

}

Measuring Viewers’ Heart Rate Response to Environment Conservation Videos

“Measuring Viewers’ Heart Rate Response to Environment Conservation Videos”, Pallavi Raiturkar, Susan Jacobson, Beida Chen, Kartik Chaturvedi, Isabella Cuba, Andrew Lee, Melissa Franklin, Julian Tolentino, Nia Haynes, Rebecca Soodeen, and Eakta Jain, ACM Symposium on Applied Perception (SAP), 2016.

- Poster Abstract (PDF)

- Code & Data (ZIP)

- Bibtex entry:

@inproceedings{Raiturkar:2016:MVH:2931002.2948724,

author = {Raiturkar, Pallavi and Jacobson, Susan and Chen, Beida and Chaturvedi, Kartik and Cuba, Isabella and Lee, Andrew and Franklin, Melissa and Tolentino, Julian and Haynes, Nia and Soodeen, Rebecca and Jain, Eakta},

title = {Measuring Viewers’ Heart Rate Response to Environment Conservation Videos},

booktitle = {Proceedings of the ACM Symposium on Applied Perception},

series = {SAP ’16},

year = {2016},

pages = {138--138},

doi = {10.1145/2931002.2948724},

url = {http://doi.acm.org/10.1145/2931002.2948724}

}

Scan Path and Movie Trailers for Implicit Annotation of Videos

“Scan Path and Movie Trailers for Implicit Annotation of Videos”, Pallavi Raiturkar, Andrew Lee, and Eakta Jain, ACM Symposium on Applied Perception (SAP), 2016.

- Poster Abstract (PDF)

- Code & Data (ZIP)

- Bibtex entry:

@inproceedings{Raiturkar:2016:SPM:2931002.2948723,

author = {Raiturkar, Pallavi and Lee, Andrew and Jain, Eakta},

title = {Scan Path and Movie Trailers for Implicit Annotation of Videos},

booktitle = {Proceedings of the ACM Symposium on Applied Perception},

series = {SAP ’16},

pages = {141--141},

doi = {10.1145/2931002.2948723},

year = {2016},

url = {http://doi.acm.org/10.1145/2931002.2948723}

}

Copyright notice

© 2016 Copyright is held by the owner/author(s). Publication rights licensed to ACM.